Dynamic Scaling: Adapting Infrastructure to Varying Workloads

For medium to high-load projects, Kubernetes is considered the industry standard for orchestrating containerized applications. Organizations rely on it because it automates deployments, scaling, and recovery, which reduces manual intervention and operational overhead and makes it well suited to demanding workloads.

However, Kubernetes itself doesn't provision infrastructure or manage the underlying machines; these tasks require additional tooling.

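On AWS, two tools are commonly used for this: the Cluster Autoscaler, an open-source Kubernetes project that resizes predefined node groups (EC2 Auto Scaling Groups), and Karpenter, an open-source node provisioner originally built by AWS that launches EC2 instances directly. While both add and remove nodes for Kubernetes workloads, they take different approaches.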

In this article, we'll compare their approaches, strengths, and trade-offs to determine which is better suited for specific use cases.

What is Scaling?

Imagine you are using Opigno LMS to support 10,000+ users. At 2 PM, you have 1,000 online users; by 5 PM, the number grows to 1,500; and by 3 AM, it drops to 400. Your system must adjust accordingly, scaling up resources when demand spikes and reducing excess capacity during off-peak hours to minimize costs.
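To make the example concrete, the arithmetic behind "scaling accordingly" can be sketched in a few lines of Python. The per-pod capacity of 250 concurrent users is a hypothetical figure chosen for illustration; the real number depends on the application and has to be measured.

```python
import math

# Hypothetical capacity: assume one pod comfortably serves 250 concurrent users.
USERS_PER_POD = 250


def pods_needed(online_users: int, users_per_pod: int = USERS_PER_POD) -> int:
    """Smallest number of pods that covers the load (never fewer than one)."""
    return max(1, math.ceil(online_users / users_per_pod))


for time_of_day, users in [("2 PM", 1_000), ("5 PM", 1_500), ("3 AM", 400)]:
    print(f"{time_of_day}: {users} users -> {pods_needed(users)} pods")
```

Under that assumption, the deployment needs 4 pods at 2 PM, 6 at 5 PM, and only 2 at 3 AM. Running 6 pods around the clock would leave most of that capacity idle overnight.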

In Kubernetes, scaling means adjusting pods and nodes, either their number or the resources allocated to them, based on workload requirements. The following components play a central role in handling application workloads:

Pods: The smallest deployable units in Kubernetes, each containing one or more containers that share networking and storage.

Nodes: The worker machines (physical or virtual) that run pods. Each node has limited CPU and memory resources.

Clusters: A collection of nodes managed together, allowing Kubernetes to distribute workloads efficiently.

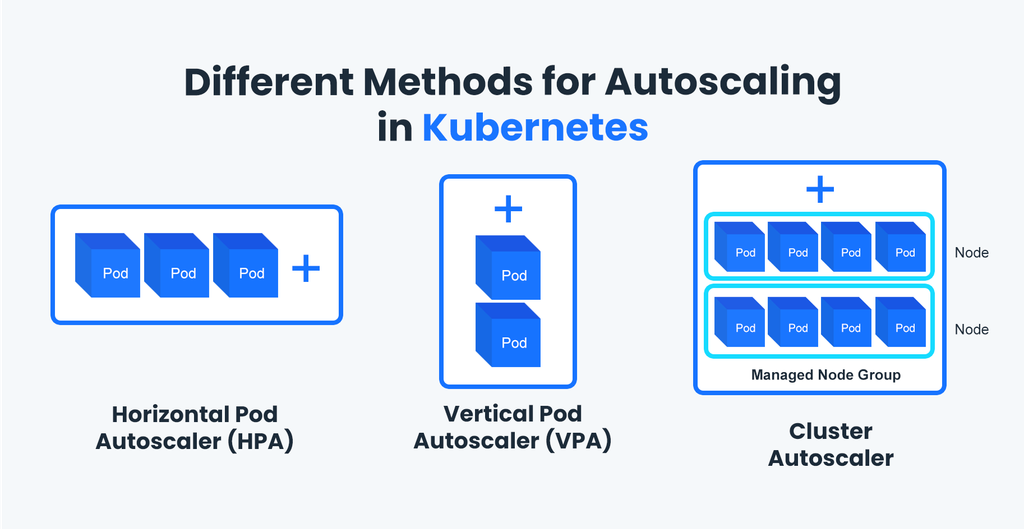

Scaling can be approached at pod and node levels, depending on the bottleneck:

Horizontal Pod Autoscaler (HPA) automatically adjusts the number of running pods based on CPU or memory usage or custom metrics like request count. When the load increases, HPA creates more replicas of a pod to handle the traffic. When demand drops, excess pods are removed to save resources.
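The Kubernetes documentation describes the core of the HPA calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change while that ratio stays within a tolerance (0.1 by default). The sketch below reproduces only that formula and the min/max clamp; the real controller also accounts for pod readiness, missing metrics, and stabilization windows. The function name and parameters are illustrative, not part of any Kubernetes API.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA formula: ceil(current_replicas * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the minReplicas / maxReplicas bounds configured on the HPA.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target: scale out to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))
# 6 pods averaging 30% CPU against a 60% target: scale in to 3.
print(desired_replicas(6, current_metric=30, target_metric=60))
```

The tolerance band keeps the replica count from oscillating on small metric fluctuations, and the max bound caps how far a sudden spike can scale the deployment.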

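Vertical Pod Autoscaler (VPA) adjusts the CPU and memory requests of pods based on their observed usage. Instead of adding more pods, it gives each pod more (or fewer) resources; in its default update mode, it applies new values by evicting pods so they are recreated with the updated requests.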
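The idea behind vertical scaling recommendations can be illustrated with a deliberately simplified sketch: take a high percentile of observed usage, add a safety margin, and clamp the result to optional bounds. This is not VPA's actual recommender, which works on decaying histograms of usage collected over time; the 90th percentile, the 15% margin, the function name, and the sample data are all assumptions for illustration.

```python
import math
from typing import List, Optional


def recommend_request(samples: List[float], percentile: float = 0.9, margin: float = 0.15,
                      floor: Optional[float] = None, ceiling: Optional[float] = None) -> int:
    """Simplified recommendation: a high percentile of observed usage plus a safety
    margin, clamped to optional bounds. Not VPA's real algorithm."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    # Nearest-rank percentile: smallest sample covering `percentile` of observations.
    rank = max(1, math.ceil(percentile * len(ordered)))
    value = ordered[rank - 1] * (1 + margin)
    if floor is not None:
        value = max(value, floor)
    if ceiling is not None:
        value = min(value, ceiling)
    return round(value)


# Hypothetical CPU usage samples for one container, in millicores.
cpu_samples = [120, 150, 180, 200, 210, 220, 230, 250, 400, 900]
print(recommend_request(cpu_samples))  # 460: 90th percentile (400m) plus 15%
```

Using a percentile rather than the maximum keeps a single 900m spike from inflating the request, while the margin leaves headroom above typical peaks.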

Node scaling adds nodes with sufficient CPU and memory when existing nodes lack the capacity to run more pods, and removes nodes that are no longer needed. On AWS, the two primary tools for node scaling are the Cluster Autoscaler and Karpenter. Both let Kubernetes scale not just pods but the underlying infrastructure, keeping applications performant while keeping costs under control.

AWS Cluster Autoscaler

The Cluster Autoscaler is an open-source component of the Kubernetes autoscaler project that, on AWS, automatically adjusts the number of nodes in a cluster based on the resource requests of pods. It adds nodes when pods cannot be scheduled and removes underutilized ones, so the cluster has enough capacity for all pods without overprovisioning.

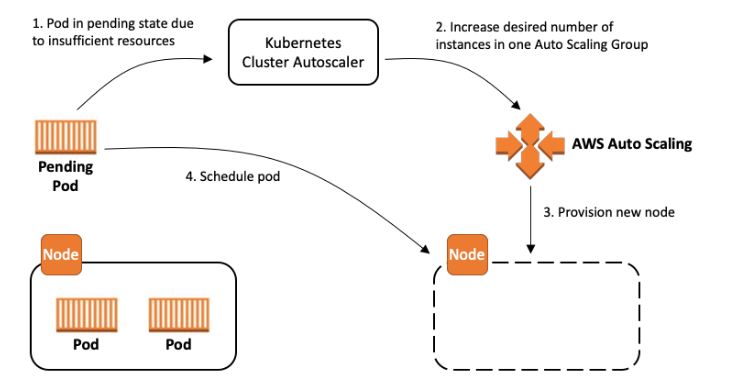

The Cluster Autoscaler integrates directly with Auto Scaling Groups (ASGs) or Managed Node Groups in Amazon Elastic Kubernetes Service (EKS). This way, Kubernetes can scale the underlying infrastructure dynamically in response to changes in workload.

When a new pod is created, the Kubernetes scheduler looks for a node that can accommodate it, considering the CPU and memory the pod requests, node selectors and affinity rules, taints and tolerations, and other constraints. Once a suitable node is found and the pod is assigned to it, the pod is "scheduled."
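The scheduling decision can be sketched as a feasibility check followed by placement. In this simplified Python model, a pod fits a node if the node's unrequested allocatable CPU and memory cover the pod's requests, every nodeSelector label matches, and the pod tolerates every taint on the node. The real kube-scheduler also evaluates affinity, taint effects, and many other filters, then scores the feasible nodes instead of taking the first one; the first-fit choice and all class and function names here are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    allocatable_cpu_m: int                        # millicores the node can offer to pods
    allocatable_mem_mi: int                       # MiB the node can offer to pods
    labels: dict = field(default_factory=dict)
    taints: set = field(default_factory=set)      # simplified: taint keys only, effects ignored
    requested_cpu_m: int = 0                      # sum of requests of pods already placed
    requested_mem_mi: int = 0


@dataclass
class Pod:
    name: str
    cpu_m: int                                    # CPU request in millicores
    mem_mi: int                                   # memory request in MiB
    node_selector: dict = field(default_factory=dict)
    tolerations: set = field(default_factory=set)


def fits(pod: Pod, node: Node) -> bool:
    """Feasibility check: resources, nodeSelector labels, and taints."""
    free_cpu = node.allocatable_cpu_m - node.requested_cpu_m
    free_mem = node.allocatable_mem_mi - node.requested_mem_mi
    if pod.cpu_m > free_cpu or pod.mem_mi > free_mem:
        return False
    if any(node.labels.get(key) != value for key, value in pod.node_selector.items()):
        return False
    # Every taint on the node must be tolerated by the pod.
    return node.taints <= pod.tolerations


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Place the pod on the first feasible node; None means it stays Pending."""
    for node in nodes:
        if fits(pod, node):
            node.requested_cpu_m += pod.cpu_m
            node.requested_mem_mi += pod.mem_mi
            return node.name
    return None


nodes = [
    Node("node-a", 2000, 4096, requested_cpu_m=1800),
    Node("node-b", 4000, 8192, taints={"gpu"}),
]
print(schedule(Pod("web", cpu_m=500, mem_mi=512), nodes))  # None: no room on node-a, taint on node-b
```

A `None` result corresponds to an unschedulable pod, which is exactly the signal node autoscalers react to.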

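When no node can accommodate a pod, the pod stays in the Pending state as unschedulable. The Cluster Autoscaler detects such pods and scales up by adding nodes to the pre-configured Auto Scaling Groups or EKS Managed Node Groups. Conversely, it scales down by removing nodes that remain underutilized for a period of time (10 minutes by default) and whose pods can be rescheduled elsewhere.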
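Both directions can be modeled roughly as follows. For scale-up, the sketch packs pending pods onto hypothetical new nodes of a group's fixed shape (first-fit decreasing) and caps the result at the group's maximum size; for scale-down, it flags a node whose requested CPU and memory both fall below a utilization threshold. The Cluster Autoscaler does use a bin-packing estimate and defaults to a 0.5 scale-down utilization threshold, but this sketch omits much of its logic (expanders, pod eviction checks, the unneeded-time timer), and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class NodeGroup:
    name: str
    node_cpu_m: int      # allocatable CPU of one node in this group (millicores)
    node_mem_mi: int     # allocatable memory of one node in this group (MiB)
    size: int            # current number of nodes
    max_size: int        # upper bound configured on the group


def nodes_to_add(pending: List[Tuple[int, int]], group: NodeGroup) -> int:
    """Estimate how many nodes to add for pending (cpu_m, mem_mi) requests using
    first-fit-decreasing bin packing, capped by the group's max size."""
    new_nodes = []  # remaining [cpu_m, mem_mi] on each hypothetical new node
    for cpu, mem in sorted(pending, reverse=True):
        if cpu > group.node_cpu_m or mem > group.node_mem_mi:
            continue  # too large for this node shape; adding nodes would not help
        for free in new_nodes:
            if free[0] >= cpu and free[1] >= mem:
                free[0] -= cpu
                free[1] -= mem
                break
        else:
            new_nodes.append([group.node_cpu_m - cpu, group.node_mem_mi - mem])
    return max(0, min(len(new_nodes), group.max_size - group.size))


def is_scale_down_candidate(requested_cpu_m: int, requested_mem_mi: int,
                            group: NodeGroup, threshold: float = 0.5) -> bool:
    """A node is a candidate when both CPU and memory requests are below the threshold."""
    utilization = max(requested_cpu_m / group.node_cpu_m,
                      requested_mem_mi / group.node_mem_mi)
    return utilization < threshold


group = NodeGroup("general-purpose", node_cpu_m=2000, node_mem_mi=8192, size=3, max_size=5)
print(nodes_to_add([(1500, 1024)] * 5, group))    # 2: the group can grow by only two nodes
print(is_scale_down_candidate(600, 2048, group))  # True
```

In the first call, five pending pods would each need their own node, but the group's maximum size allows only two more; the remaining pods stay Pending until capacity frees up or the group's limits change.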

Because scaling stays within the boundaries of predefined node groups, the Cluster Autoscaler offers a simple and predictable way to manage nodes. However, it can only choose among the instance types already configured in those groups; it cannot select the most cost-efficient instance type on the fly to match the CPU, memory, or other requirements of the pending workload.

Karpenter

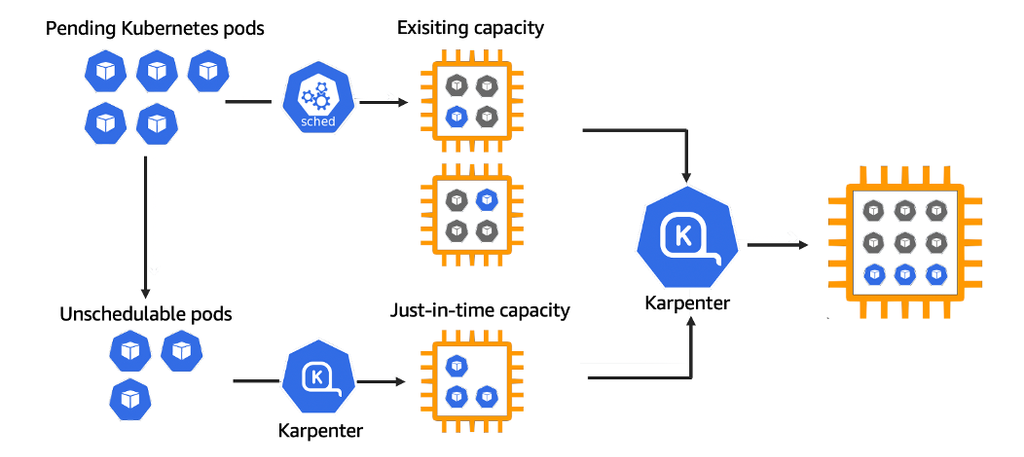

Karpenter is a flexible, open-source node autoscaler originally developed by AWS to improve how Kubernetes clusters scale nodes. Unlike the Cluster Autoscaler, which resizes node groups, Karpenter calls the cloud provider's API (e.g., Amazon EC2) directly to provision and optimize nodes in real time based on current workload demands, making it a more dynamic and potentially more cost-efficient alternative.

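With Karpenter, you don't need to predefine node groups or their sizes. Instead, you describe constraints, such as allowed instance types, availability zones, capacity type (On-Demand or Spot), and overall resource limits, in a NodePool resource, and Karpenter chooses nodes within those constraints.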

When it detects pods that are unschedulable due to resource constraints, Karpenter provisions new nodes using the most suitable and cost-effective instance types for their actual resource needs. Because it launches instances directly rather than waiting for an Auto Scaling Group to react, it can also shorten the delay in handling traffic spikes, often bringing new capacity online faster than the Cluster Autoscaler.
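Karpenter's instance selection can be approximated as "find the cheapest allowed instance type whose capacity covers what the pending pods request." The sketch below applies that rule to a single new node. The catalog names and hourly prices are invented for illustration and are not real EC2 instance types or prices; real Karpenter bin-packs pods across multiple nodes, subtracts system overhead from capacity, and can hand several candidate types to EC2 rather than choosing exactly one.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class InstanceType:
    name: str
    cpu_m: int            # usable CPU in millicores
    mem_mi: int           # usable memory in MiB
    hourly_price: float   # illustrative numbers, not real EC2 prices


# Hypothetical catalog for illustration only.
CATALOG = [
    InstanceType("general-2cpu-8gi", 2000, 8192, 0.10),
    InstanceType("memory-2cpu-16gi", 2000, 16384, 0.13),
    InstanceType("compute-4cpu-8gi", 4000, 8192, 0.17),
    InstanceType("general-4cpu-16gi", 4000, 16384, 0.19),
]


def cheapest_fit(pending: List[Tuple[int, int]], catalog: List[InstanceType],
                 allowed: Callable[[InstanceType], bool] = lambda it: True
                 ) -> Optional[InstanceType]:
    """Cheapest allowed instance type that can hold all pending (cpu_m, mem_mi) requests."""
    total_cpu = sum(cpu for cpu, _ in pending)
    total_mem = sum(mem for _, mem in pending)
    candidates = [it for it in catalog
                  if allowed(it) and it.cpu_m >= total_cpu and it.mem_mi >= total_mem]
    return min(candidates, key=lambda it: it.hourly_price, default=None)


# Memory-heavy pod: the memory-optimized shape is cheapest.
print(cheapest_fit([(500, 12000)], CATALOG).name)   # memory-2cpu-16gi
# CPU-heavy pod: the compute-optimized shape is cheapest.
print(cheapest_fit([(3000, 4000)], CATALOG).name)   # compute-4cpu-8gi
```

The `allowed` filter plays the role of NodePool constraints: excluding the memory-optimized family, for instance, makes the first request fall back to `general-4cpu-16gi`. A node group limited to a single shape would have to use that shape for every workload.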

When demand drops, Karpenter consolidates workloads: it reschedules pods onto fewer (or cheaper) nodes and terminates nodes that are empty or no longer needed. Keeping the number of active nodes to a minimum packs pods efficiently and eliminates unnecessary cloud spending.
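A simplified model of the consolidation check: a node can be removed if every pod on it fits into the spare capacity of the remaining nodes. The sketch tries the nodes with the fewest pods first and reports the first one that could be drained. Real Karpenter consolidation also considers replacing a node with a cheaper one and respects pod disruption budgets and other disruption controls; the data layout and function names here are hypothetical.

```python
from typing import Optional


def can_consolidate(candidate: str, nodes: dict) -> bool:
    """True if every pod on `candidate` fits into spare capacity on the other nodes.

    `nodes` maps a node name to {"free_cpu_m": int, "free_mem_mi": int,
    "pods": [(cpu_m, mem_mi), ...]}; the input is not modified.
    """
    spare = [[n["free_cpu_m"], n["free_mem_mi"]]
             for name, n in nodes.items() if name != candidate]
    # Place the largest pods first (first-fit decreasing).
    for cpu, mem in sorted(nodes[candidate]["pods"], reverse=True):
        target = next((s for s in spare if s[0] >= cpu and s[1] >= mem), None)
        if target is None:
            return False
        target[0] -= cpu
        target[1] -= mem
    return True


def pick_node_to_remove(nodes: dict) -> Optional[str]:
    """Try the nodes with the fewest pods first; return the first that can be drained."""
    for name in sorted(nodes, key=lambda n: len(nodes[n]["pods"])):
        if can_consolidate(name, nodes):
            return name
    return None


cluster = {
    "node-1": {"free_cpu_m": 1500, "free_mem_mi": 4096, "pods": [(500, 1024)]},
    "node-2": {"free_cpu_m": 1000, "free_mem_mi": 2048, "pods": [(1000, 2048), (500, 1024)]},
    "node-3": {"free_cpu_m": 200, "free_mem_mi": 512, "pods": [(1800, 3584)]},
}
print(pick_node_to_remove(cluster))  # node-1: its pod fits into node-2's spare capacity
```

After draining `node-1`, the check would be repeated against the updated cluster; evaluating nodes one at a time avoids approving two removals that only work individually.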

Together, these capabilities give Karpenter greater flexibility and more efficient resource allocation than the Cluster Autoscaler, making it particularly effective for applications with unpredictable or highly variable workloads, such as e-learning platforms, media streaming services, or SaaS applications with fluctuating user traffic.

Optimizing Cloud Infrastructure Requires Continuous Expertise

Scaling Kubernetes clusters may seem straightforward, but cloud infrastructure is full of pitfalls, from inefficient resource allocation to unexpected costs and performance bottlenecks. Not all of them can be solved with Cluster Autoscaler and Karpenter.

Maintaining an optimized, cost-effective setup requires continuous monitoring, analysis, and fine-tuning to keep up with changing workloads, pricing fluctuations, and evolving cloud provider capabilities. Our experts can design, implement, and continuously optimize your Kubernetes scaling strategy to ensure a resilient, efficient, cost-effective cloud environment. Let's build a smarter infrastructure together: contact us today!